Ever wondered whether stereo was good for you? A brilliant wee ‘stereo fixer’ – The Soundstage Activator – sends DR RICHARD VAREY off on a fascinating voyage to discover the truth about the way we listen to music.

As I have reviewed and used a Taket supertweeter product previously, Toshitaka Takei of Taket (Japan) suggested that I might like to try their new hi-fi enhancement product, the Taket Soundstage Activator.

Takei explained the problem they have solved with this small device: a problem in the way the brain reacts to a stereo sound presentation, and one that is inherent in the stereo playback system we take for granted as the normal way to hear recorded music. The Taket SA is designed to be a simple, effective and affordable solution. I liked the sound of it in principle. Would I like the sound of it in practice? Only one way to find out!

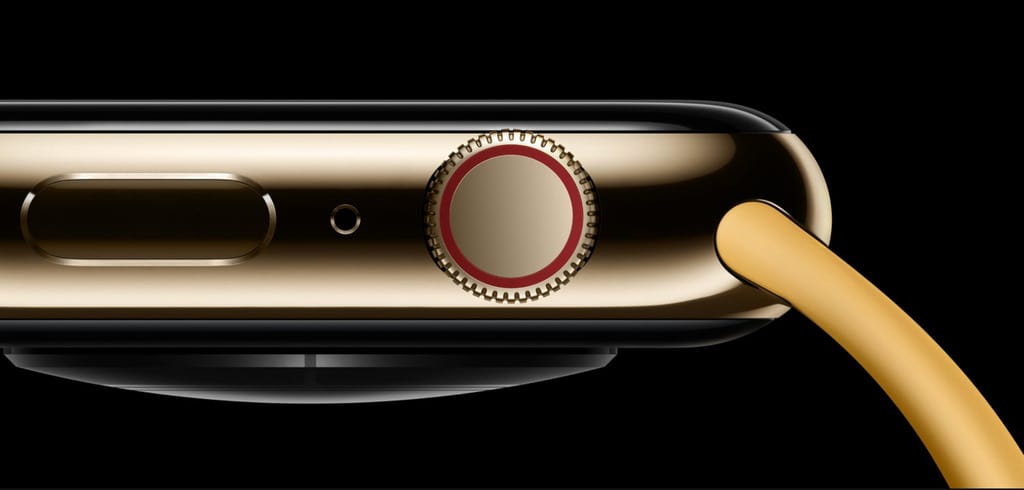

The Soundstage Activator is the result of experimentation, and while I can explain what it is doing and why this is desirable, just why the effect happens is something of a mystery to me… yet it is clearly evident and very enjoyable. So I investigated further. It’s a tiny black box with a control knob on the front, just 69mm x 87mm x 38mm and weighing only 90g (unbalanced version) or 115g (balanced version). Connection is simply to the loudspeaker terminals, at either the loudspeaker or the amplifier.

Curious to understand the problem and this simple solution, I connected the Taket SA to my Sachem v2 monoblocks and Audio Pro Avanti DC100 floorstanding loudspeakers. There was an immediate and pleasing change in the soundstage presentation, and this could be audibly adjusted by rotating the control knob. What was happening and why was the enhancement necessary? Here follows an account of my journey of exploration into the quirks of stereo sound recording and reproduction.

So, on the menu for my latest audiophile regalement is a portion of crosstalk, a tiny serving of crossfeed, and an aside of headphones. Can a lower-stereo diet be both appetising and nourishing, I wondered?

The problems of stereo listening

Stereo music imaging suffers from one issue at the recording stage of the musician-to-listener chain, in the mixdown of multiple inputs to two channels, and from another at the playback stage, in loudspeaker placement relative to the listener. Mr Takei offers a means of adjusting the stereo image to address this taken-for-granted problem with stereo reproduction.

Trying out this enhancement accessory and getting an instant effect inspired me to look at the whole question of whether stereo is the best way to hear recorded music. I knew that not everyone agrees, so what’s going on, I wondered? Some audiophiles hear the centre of the stereo image as thin, even though the centre is the leading part of the real soundstage in which the recording was made. They discern a rough or misty texture, with no sense of space, in stereo soundstaging, and some are resigned to this as inevitable in stereo sound. Some multi-channel systems have been adopted to partly solve this problem, and they may extend the soundstage presentation, but the result is an opaque and flat soundstage. So some audiophiles prefer to listen in mono.

Recordings were still being issued in mono late into the 1960s, although stereo was patented in 1931. I often pick them up in my record crate digging jaunts, and they sound good on my two-channel system. [Coincidentally, this is being written just as it was announced that Alan Blumlein had been awarded a posthumous Grammy by The Recording Academy in part for his patented work on stereo sound reproduction. He filed the original patent application for a two-channel audio system.]

I’ve heard it said that a stereo image is an outline, while a mono image is the substance of a soundstage presentation. Monophiles prefer single-channel listening. ‘Simulated stereo’ or ‘enhanced’ recordings are monophonic recordings electronically processed with phase shift and/or delay and reverb to make the channels sound different (for example, Capitol Records’ Duophonic discs). Hitting the mono button on a two-channel amplifier does not produce the same sound image as playing a mono recording. In a mono image, voices and instruments are centred; a stereo image has spatial separation of voices and instruments.

True stereo recording uses multiple microphones, and the multiple signals are mixed to two channels. This is not the same as several mono recordings spread between two channels. True mono recordings use a single microphone. It’s not natural to hear a sound the same in both ears. At Taket they reasoned that a centred sound source should be distributed equally to the left and right stereo channels. However, our perception of the sound hesitates as the brain tries to determine whether the two channels carry the same sound, because tiny differences between the left and right signals are introduced by the recording, the reading of the playback media, the phase of the audio signal, and speaker placement. The Taket folks think this is the main reason for the disappointing difference between live sound and stereo recorded sound. If so, the problem might be solved by helping the brain confirm that the left and right signals come from the same origin. Their solution is micro-mixing of the two channel signals from the amplifier just before they reach the speakers.
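
To make micro-mixing concrete, here is a minimal Python sketch of ideal, delayless cross-mixing. It assumes each output is simply its own channel plus a fraction `k` of the other. The function name `micro_mix` and the coefficient `k` are illustrative assumptions: the article does not describe the SA’s actual circuit, and a passive network across real speaker terminals will not behave this ideally.

```python
def micro_mix(left, right, k):
    """Ideal delayless cross-mix (illustrative model, not Taket's circuit).

    Each output gets a fraction k of the other channel.
    k = 0 leaves the stereo signal untouched; k = 1 puts L+R in both outputs.
    """
    if not 0.0 <= k <= 1.0:
        raise ValueError("k must be between 0 and 1")
    out_left = [lv + k * rv for lv, rv in zip(left, right)]
    out_right = [rv + k * lv for lv, rv in zip(left, right)]
    return out_left, out_right


# Hypothetical test signal: one sound hard left, one hard right, one centred.
left = [1.0, 0.0, 0.5]
right = [0.0, 1.0, 0.5]
print(micro_mix(left, right, 1e-6))  # tiny blend: almost unchanged
print(micro_mix(left, right, 1.0))   # full blend: identical channels
```

With `k = 0` the channels pass through as stereo; with `k = 1` both outputs become L+R, which corresponds to the ‘mono’ (L+R, R+L) end of the SA’s control scale.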

The other issue is found in the typical loudspeaker arrangement. The geometry of the speaker locations relative to the listener’s ears causes timing and phase differences that create a confused or blurred stereo image. We don’t naturally hear in stereo! A stereo image isn’t natural. A two-channel music playback system only directly mimics situations in which two musicians are located at the same distance from the listener, at the far corners of a triangle. That is, there are two large point sources of sound, one emanating from the left channel and the other from the right channel. Importantly, each ear hears a slightly different sound, and we perceive sounds from different places as arriving with slightly different delays. In all other cases where multiple instruments are played, there is an attempt to recreate the soundstage of the recorded assembly, or an artificial image is created in the studio with processing effects and mixing.
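
Those timing differences can be estimated with a little geometry. The sketch below rests on several assumptions: the common equilateral-triangle layout, with speakers 30 degrees either side of centre and 2 m from the listener; an ear spacing of 17.5 cm; and a speed of sound of 343 m/s. It also treats the ears as two points in free air and ignores the head itself. It computes when the left speaker’s sound reaches each ear.

```python
import math

SPEED_OF_SOUND = 343.0     # m/s, air at about 20 degrees C
EAR_HALF_SPACING = 0.0875  # m, assumed; ears modelled as two points, head ignored


def speaker_position(distance, azimuth_deg):
    """(x, y) of a speaker for a listener at the origin facing +y.

    Negative azimuth is to the listener's left.
    """
    a = math.radians(azimuth_deg)
    return distance * math.sin(a), distance * math.cos(a)


def ear_arrival_times(distance, azimuth_deg):
    """Free-field arrival times (s) at the left and right ears from one speaker."""
    speaker = speaker_position(distance, azimuth_deg)
    left_ear = (-EAR_HALF_SPACING, 0.0)
    right_ear = (EAR_HALF_SPACING, 0.0)
    return (math.dist(speaker, left_ear) / SPEED_OF_SOUND,
            math.dist(speaker, right_ear) / SPEED_OF_SOUND)


# Left speaker of an equilateral triangle: 2 m away, 30 degrees to the left.
t_near, t_far = ear_arrival_times(2.0, -30.0)
print(f"direct sound reaches the left ear after {1e3 * t_near:.2f} ms")
print(f"crosstalk reaches the right ear {1e3 * (t_far - t_near):.3f} ms later")
```

On these assumptions the far ear hears the left speaker roughly a quarter of a millisecond after the near ear. The same happens, mirrored, for the right speaker.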

Now it gets complicated, as two-channel mixing often separates vocals and instruments in an intentionally artificial way. This is a fundamental characteristic of ‘stereo’ playback using loudspeakers. Recording studios generally mix with a near-field speaker configuration, so they don’t hear the full crosstalk effect experienced in the typical home listening setup that is (almost) universally recommended by loudspeaker manufacturers. The distinction I’m making is between a document of a real performance, recreated, and a commercial product, reproduced. The typical stereo mixdown creates something diminished. Somehow it’s lessened; something is lost. I noticed in the sleevenotes of an EMI album from 1980 that the recording had been ‘reduced to stereo’, and that neatly states the problem that drives some audiophiles to prefer mono albums. Instead of an artificial representation constructed from left and right separations, mono recordings sound like music in front of you, and you get into the depth of the sound, often hearing the characteristics of the room in which the music was recorded. Columbia recordings of the 1950s and 1960s are notable for this.

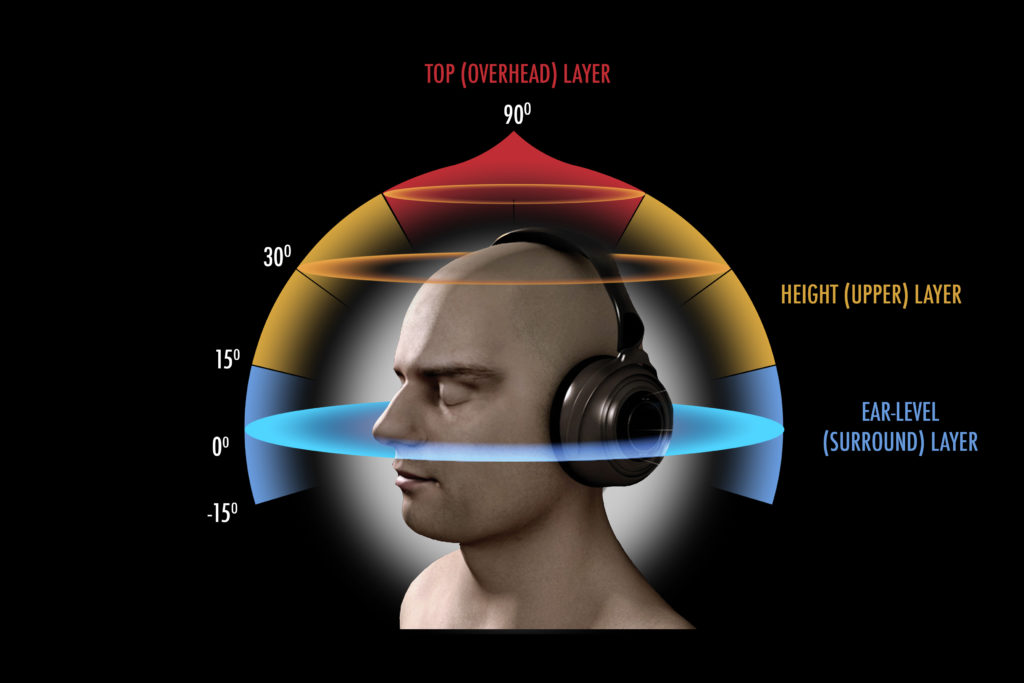

I also read an interview with David Chesky, famed for his binaural recordings, in PS Audio’s Copper magazine. Chesky Records have pioneered binaural recording, always with headphone listening in mind. Such recordings are made with two microphones placed at the ear positions of a dummy head. The reasoning is that our two separated ears hear sound sources differently, according to their relative positions. Sound from a source is detected by both ears with a time difference between the two signals. Musical sources are never point sources; the concept of soundstaging or imaging recognises the three-dimensional separation of voices and instruments. So multiple sources transmit multiple signals that resonate or interfere as they are heard together. Discerning the differences is a very complex task for the brain, especially as the head blocks some components of the sound, while part of a sound also travels through the head. Low frequencies diffract around the head, whilst medium and high frequencies are reflected, reaching the farther ear only indirectly. Recording at the ears and listening through speakers close to the ears (headphones), with no distant loudspeakers, creates quite a different experience from typical stereo speaker listening: there is no significant acoustical crosstalk.
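
Woodworth’s spherical-head formula is a standard textbook approximation for that interaural time difference. It allows for sound travelling around the head to reach the far ear. The sketch below is a minimal version that assumes a head radius of 8.75 cm, a speed of sound of 343 m/s and a distant source. It is a simplified model, not a description of how binaural recordings are made.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s
HEAD_RADIUS = 0.0875    # m, assumed typical adult head radius


def woodworth_itd(azimuth_deg, radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference (s) from Woodworth's spherical-head model.

    For a distant source at azimuth theta (0 = straight ahead, up to 90 degrees
    either side), the far ear's extra path is r*sin(theta) in front of the head
    plus an arc of r*theta around it: ITD = (r / c) * (theta + sin(theta)).
    """
    if abs(azimuth_deg) > 90:
        raise ValueError("model covers azimuths from -90 to 90 degrees")
    theta = math.radians(abs(azimuth_deg))
    return radius / c * (theta + math.sin(theta))


for az in (0, 30, 60, 90):
    print(f"{az:>2} degrees: {1e3 * woodworth_itd(az):.3f} ms")
```

Under these assumptions, a source directly to one side gives the maximum difference of roughly 0.66 ms. A source 30 degrees off-centre gives about 0.26 ms.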

David Chesky’s team is addressing the stereo-through-loudspeakers problem. He explains that all stereo is flawed because sitting in front of two speakers in a 60-degree triangle introduces interaural crosstalk corruption. He likens it to watching a 3D movie without the special glasses. When listening at home with a hi-fi system, each ear hears both speakers at the same time: the left ear hears the left and right speakers, and so does the right ear. This condition is “crosstalk corruption”, and the brain can’t figure out where the sound source images are. David Chesky went on to talk about a technology that uses digital signal processors to cancel the crosstalk between the speakers, creating an almost 360-degree soundfield. Chesky’s work with Princeton University on optimised crosstalk cancellation filtering will result in a product for use with loudspeaker pairs. It’ll be expensive!

This led me to find out more about crosstalk, and then about crossfeed. Crosstalk is unwanted leakage of the signal in one channel into another. It occurs naturally with conventional loudspeaker setups and is simply part of the speaker listening experience. This is ‘acoustic crosstalk’. Some listeners (including me) and some audio engineers believe that crosstalk reduces sound quality (see the Ambiophonics web site). Carver’s Hologram, Glasgal’s crosstalk reducers, and Lexicon’s Panorama are designed to reduce acoustic crosstalk.
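
The Ambiophonics approach associated with Glasgal includes RACE (Recursive Ambiophonic Crosstalk Elimination). In RACE, each speaker feed has an inverted, attenuated and delayed copy of the other speaker feed added to it. The sketch below pairs a deliberately simple crosstalk model with a RACE-style canceller. In the model, each ear hears the far speaker scaled by `gain` and `delay` samples late, with no frequency dependence. The gain of 0.85 and the 3-sample delay are illustrative assumptions; practical systems band-limit the cancellation and tune it to the speaker geometry. This is not the Chesky/Princeton filter, Carver’s Hologram or Lexicon’s Panorama.

```python
def delayed(signal, i, delay):
    """Sample i of `signal` delayed by `delay` samples (zero before the start)."""
    return signal[i - delay] if i >= delay else 0.0


def acoustic_crosstalk(spk_left, spk_right, gain, delay):
    """Toy model of what the ears receive from two loudspeakers.

    Each ear hears its own speaker directly plus the opposite speaker scaled by
    `gain` and arriving `delay` samples later; head shadowing and frequency
    dependence are ignored.
    """
    ear_left, ear_right = [], []
    for i in range(len(spk_left)):
        ear_left.append(spk_left[i] + gain * delayed(spk_right, i, delay))
        ear_right.append(spk_right[i] + gain * delayed(spk_left, i, delay))
    return ear_left, ear_right


def race_cancel(left, right, gain, delay):
    """RACE-style recursive crosstalk canceller (idealised, full-band).

    Each speaker feed subtracts a scaled, delayed copy of the *other* speaker
    feed, so every cancellation signal is itself cancelled in turn.
    """
    if delay < 1:
        raise ValueError("delay must be at least one sample")
    out_left, out_right = [], []
    for i in range(len(left)):
        out_left.append(left[i] - gain * delayed(out_right, i, delay))
        out_right.append(right[i] - gain * delayed(out_left, i, delay))
    return out_left, out_right


# A click in the left channel only; gain and delay are assumed values.
left = [1.0] + [0.0] * 11
right = [0.0] * 12
spk_l, spk_r = race_cancel(left, right, gain=0.85, delay=3)
ear_l, ear_r = acoustic_crosstalk(spk_l, spk_r, gain=0.85, delay=3)
print([round(x, 6) for x in ear_r])  # the far ear receives (numerically) nothing
```

Under this idealised model the cancellation is exact. The far ear receives silence because each cancellation pulse is itself cancelled one delay later. Real heads and rooms are far less tidy.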

Unlike acoustic crosstalk, crossfeed is an electrical form of intentionally induced and carefully controlled crosstalk. It is a feature of some stereo amplifiers, included by designers to add crosstalk to headphone listening. During recording and mixing the two stereo channels are kept separate, yet in playback through loudspeakers each ear hears both speaker outputs. This doesn’t happen with headphone listening. With headphones, hearing two separate channels that were mixed to create a soundstage presentation centred between the speakers produces an odd effect.

Headphone listening is different

With headphones there is no acoustic crosstalk, because the left ear hears only the left earpiece and the right ear hears only the right. HeadRoom, for example, provide a crossfeed circuit in their headphone amps that introduces crosstalk. Some people like the effect of crossfeed because it often makes the music sound more coherent and live. When you listen to live musicians, it’s impossible for them to transmit an isolated channel into one ear and another isolated channel into the other, as headphones can. However, certain types of music don’t need crossfeed, and with it they can sound more distant, less impactful and less detailed.
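
A generic headphone crossfeed can be sketched by feeding a low-passed, slightly delayed and attenuated copy of each channel into the other. This loosely imitates how the far ear hears a loudspeaker. The Python sketch below is an illustration that rests on assumptions and does not reproduce HeadRoom’s circuit. The 700 Hz cutoff, 0.3 ms delay and 0.3 gain are arbitrary example values.

```python
import math


def one_pole_lowpass(signal, cutoff_hz, sample_rate):
    """First-order low-pass filter (exponential smoothing)."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in signal:
        y += a * (x - y)
        out.append(y)
    return out


def crossfeed(left, right, sample_rate=44100, cutoff_hz=700.0,
              delay_s=0.0003, gain=0.3):
    """Add a low-passed, delayed, attenuated copy of each channel to the other.

    All default values are illustrative assumptions, not any product's settings.
    """
    d = round(delay_s * sample_rate)

    def feed(signal):
        filtered = one_pole_lowpass(signal, cutoff_hz, sample_rate)
        return ([0.0] * d + filtered)[: len(signal)]  # shift right by d samples

    from_right, from_left = feed(right), feed(left)
    out_left = [lv + gain * x for lv, x in zip(left, from_right)]
    out_right = [rv + gain * x for rv, x in zip(right, from_left)]
    return out_left, out_right


# A steady signal in the left channel only leaks, filtered and delayed, into the right.
out_l, out_r = crossfeed([1.0] * 2000, [0.0] * 2000)
print(out_r[:15])
print(out_r[-1])
```

The delay and the low-pass filter together mimic the later, duller sound that the far ear receives from a loudspeaker. The gain sets how much of that leakage is added.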

Back in the 1970s a UK hi-fi magazine (I can’t recall which one) published a DIY project for a circuit they called the Headphoney. It countered the unnatural effect of music seeming to emanate from inside the head when listening to stereo recordings on headphones, by introducing some frequency-dependent phase differences and mixing them between channels. I assembled one, and still have it, so I can confirm that the effect is to widen the soundstage presentation and to shift it firmly outside the head, apparently into the space surrounding the head.
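
The Headphoney’s actual circuit isn’t described here. One standard way to introduce a frequency-dependent phase shift without changing the level at any frequency is a first-order all-pass filter. This sketch assumes a digital implementation with an arbitrary coefficient `coeff` (stable for |coeff| < 1). It shows both the filter’s frequency response and its time-domain difference equation.

```python
import cmath
import math


def allpass_response(coeff, freq_hz, sample_rate=44100):
    """Complex response of H(z) = (coeff + z^-1) / (1 + coeff * z^-1)."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / sample_rate)
    return (coeff + z_inv) / (1.0 + coeff * z_inv)


def allpass_filter(signal, coeff):
    """Same filter in the time domain: y[n] = coeff*x[n] + x[n-1] - coeff*y[n-1]."""
    out, x_prev, y_prev = [], 0.0, 0.0
    for x in signal:
        y = coeff * x + x_prev - coeff * y_prev
        out.append(y)
        x_prev, y_prev = x, y
    return out


# Arbitrary example coefficient; any |coeff| < 1 gives a stable all-pass.
for f in (100, 1000, 5000, 15000):
    h = allpass_response(0.5, f)
    print(f"{f:>5} Hz: gain {abs(h):.3f}, phase {math.degrees(cmath.phase(h)):7.1f} deg")
```

The gain stays at 1 at every frequency while the phase shift grows with frequency. Mixing such a phase-shifted signal between channels is one plausible reading of what the article describes.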

Activating the soundstaging

Does the Taket SA partly counter crosstalk corruption by adding micro-crossfeed? I’m thinking of it as a loudspeaker user’s version of the crossfeed device, reducing or partially removing crosstalk rather than adding it. The Taket Soundstage Activator micro-mixes the amplifier’s two output channels to shift away from separated channels towards something approaching mono, evidently with good effect on listening pleasure, so I’ve (literally) heard.

The Taket Soundstage Activator counters crosstalk effects in loudspeaker listening, and works with all sources because the effect is applied at the amplifier output. It does so using a rather different engineering principle, developed by Taket across a range of their own enhancement products. The outcome of that product experience and further experimentation, the Soundstage Activator is a passive, variable, delayless micro-crossfeeder.

Adjustable micro-mixing blends a tiny (millionth-part, hence ‘micro’) portion of each channel’s signal into the other channel. At the extreme setting, a pseudo-mono effect is produced. Even such a small amount of signal mixing is effective in overcoming the loudspeaker ‘blurring’ problem to some degree. The Taket Soundstage Activator is designed to enhance sound quality by mitigating the artificiality of the ‘two-sound-sources’ effect. This, it is claimed, tightens and deepens bass, enhances midrange three-dimensionality, and sweetens the treble, producing overall a more natural sound that the human brain can understand better than two contrived, separate channel point sources. Taket is known for piezoelectric supertweeters, and like them this device is simply connected across the amplifier outputs and adjusted to personal taste. The effect at any given setting will vary among recordings according to how instruments are positioned across the two channels in the mix, and adjusting the setting will change the audio image accordingly.
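
For a sense of scale, the ‘millionth part’ can be expressed in decibels. The article does not say whether it is a voltage or a power ratio, so this small sketch computes both readings. It uses 20·log10 for amplitude (voltage) ratios and 10·log10 for power ratios.

```python
import math


def voltage_ratio_to_db(ratio):
    """Amplitude (voltage) ratio in decibels: 20 * log10(ratio)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 20.0 * math.log10(ratio)


def power_ratio_to_db(ratio):
    """Power ratio in decibels: 10 * log10(ratio)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 10.0 * math.log10(ratio)


millionth = 1e-6
print(f"as a voltage ratio: {voltage_ratio_to_db(millionth):.1f} dB")
print(f"as a power ratio:   {power_ratio_to_db(millionth):.1f} dB")
```

Read as a voltage ratio, a millionth is 120 dB down. Read as a power ratio, it is 60 dB down.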

Various software tools have been developed with a similar effect, for example the Digital Signal Processing options in JRiver Media Center, although these apply only to playback of digital files, whereas the Taket Soundstage Activator acts on all output from the amplifier to which it is connected.

Audition

What did it do when connected to my system? On first switching the control knob from the off position and rotating to the suggested position 3 on the 1 (stereo = L, R) to 12 (‘mono’ = L+R, R+L) scale, the effect was noticeable but quite subtle. However, on prolonged listening, I clearly heard a stereo image with instruments and voices well discerned. And there was more. Whatever it was doing, I immediately liked what I was hearing. Yes, surely, the image was opened up somewhat and there was additional ‘life’ in the music. The ‘stereo corrector’, as I am calling it, adds weight and additional micro-dynamic resolution, making the playback more engaging. Just as I have experienced with the Taket BATPure supertweeter, the effect is in finessing the way the music makes sense, rather than in tonal colouring, as say with a tone control or equaliser. The sound is just more meaningful as a musical experience. By that I mean it sounds more like instruments and voices and less like a stereo recording. The presence is uplifted and the soundstage presentation is more open and coherent. Indeed, the soundstaging is ‘activated’.

I wondered whether the effect of the Soundstage Activator differs between obviously studio-contrived, complex stereo soundstage presentations and simple, natural live recordings. Good candidates for an initial comparison came to mind readily: any Moby album and Cowboy Junkies’ revered The Trinity Session. I concluded that the former is obviously contrived and the latter so natural and present. Then I compared a live concert recording made with two distant microphones with a studio-constructed, sound-designed album, Steven Wilson’s Hand.Cannot.Erase. His previous album The Raven was recorded live in the studio, as was Pistolero from Frank Black & The Catholics. This liveness was clearly discernible, and very enjoyable. I also listened to some of David Elias’ live one- or two-microphone recordings, which he issues in DSD format. My ‘engineered’ live recordings of choice for comparison were Thin Lizzy’s Live And Dangerous and Peter Frampton’s Comes Alive! Without exaggeration, I can say that a ‘real’ performance is so fresh compared with a ‘product’. The ‘natural’ image seems cleaner and more dynamic, making the listening experience that much more engaging.

Thank you to Toshitaka Takei for bringing this device and effect to my notice, and for his friendly co-operation in supplying a sample of the Soundstage Activator and patiently answering my questions. In summary, the soundstaging activation is instantly effective, and the unit is unobtrusive, easily fitted and requires no expensive or special wiring. It’s also a very affordable listening upgrade. What’s not to like? I have had the device connected for several months, and it is now a permanent enhancement to my listening pleasure. Highly recommended.

* Price for the balanced version is around $NZ310, and the unbalanced version $NZ248, at the time of publication. Further details at Taket.

I’ll give you all a clue, even though I haven’t got a clue about the exact details: our ears are biased, as they need to be to determine direction somehow. When listening to sound we have a sweet spot, and just like the L/R balance control, which can shift how we perceive the sound, it is no longer good enough once we move away from the sweet spot. What we ideally need is to play the left signal on the right speaker and the right signal on the left, but at a level difference that reflects the differences between the left and right ears, so that the crosstalk recreates the original frequency/time relationship between the two and we use the crosstalk as our direct perception. Each ear perceives a different perspective, with its own acoustic pattern-shaping for different directions. We need not only to send signals to the opposite speakers but also to adjust the frequencies using an equaliser, so that the adjusted crosstalk gives a correctly perceived direct sound of the original frequency; one ear will perceive the frequency differently from the other ear for direction reasons. We need to play it as part bass perspective and part treble, so that the crosstalk combines to create the same frequency perception for each ear no matter where you listen: a multi-perspective sound that comes together and is perceived as a single perspective. The whole image will twist and face you as you move left or right of the speakers, so you become the centre of it all. Infinite sweet spot. It’s just a frequency perception illusion: the frequency is altered and aligned differently, then compensated for by interference to bring it back to the original frequency at the ears. The best way to see this is to put on some anaglyph red/blue 3D glasses and look at 3D pictures.
Move left and right and the image follows you, because of the way our eyes (ears) perceive: both move equally together, the eye is curved and a different part of the retina perceives the images, but both turn by the same amount (in the same way, the frequency interference changes equally for both ears, but the secondary interference is the same as the original). It’s very hard for me to explain, but it is all about the pattern and direct perception, and making the differences appear to be the same. It is to do with the frequency being played and the way the next frequency plays in relation to it. I like to call it phase linearity. I’m not good at explaining, but I’ve heard it done and good recordings become magical. I personally believe that equalisation is required because of the way our ears work over distances. It all needs to be played back in 3D, not 2D, for the sounds to be perceived as if they were in their real location in our minds. The same frequency pattern repeated tells us it’s coming from the same location. Hope you can make sense of what I wrote.

You’re only half right about frequency determining the location of a sound. What the brain actually does is use the PHASE of a waveform to determine location: when a sound hits one ear, the brain measures the time it takes to reach the other ear and works out the direction the sound came from.

You are only right about frequency in that it only works at the higher frequencies, where the wavelengths are close to the size of our head. This overlaps with the part of the audio spectrum where sibilance occurs in speech.

Lower frequencies have very long wavelengths. At 20 Hz, the conventional lower limit of hearing, the wavelength is about 17 metres, far too large to hit each ear separately.
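
Wavelengths follow from λ = c / f. Here is a quick sketch, assuming 343 m/s for the speed of sound in air and 17.5 cm for the distance between the ears.

```python
SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C
EAR_SPACING = 0.175     # m, assumed distance between the ears


def wavelength(freq_hz, c=SPEED_OF_SOUND):
    """Wavelength in metres: lambda = c / f."""
    return c / freq_hz


for f in (20, 100, 1000, 2000, 20000):
    print(f"{f:>5} Hz: {wavelength(f):7.3f} m")

# Frequency whose wavelength equals the assumed ear spacing.
print(f"wavelength equals ear spacing at about {SPEED_OF_SOUND / EAR_SPACING:.0f} Hz")
```

At 20 Hz this gives about 17 m. The wavelength shrinks to roughly the width of the head at around 2 kHz.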